I worked on this project with Peter Schmidt for the Unsupervised Language Learning class during my exchange stay at the University of Amsterdam.

The project was based on the article An exemplar-based approach to unsupervised parsing, Dennis ’05. The goal: given a sentence with POS tags, induce its parse tree. We use exemplars from gold parses and induce only binary parse trees.
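To give a feel for the output space, here is a minimal sketch that enumerates every binary bracketing over a POS-tag sequence. It is only an illustration: the function name `binary_trees` and the nested-tuple tree format are made up for this example, and the exemplar-based method does not work by brute-force enumeration.

```python
from functools import lru_cache


def binary_trees(tags):
    """Return all binary bracketings over `tags` as nested tuples.

    Illustrative helper only (not taken from the project code).
    A leaf is the tag string itself; an inner node is a (left, right) pair.
    """
    tags = tuple(tags)

    @lru_cache(maxsize=None)
    def build(i, j):
        # All trees covering the span tags[i:j].
        if j - i == 1:
            return (tags[i],)
        trees = []
        for k in range(i + 1, j):  # k is the split point
            for left in build(i, k):
                for right in build(k, j):
                    trees.append((left, right))
        return tuple(trees)

    return list(build(0, len(tags)))


if __name__ == "__main__":
    for tree in binary_trees(["DT", "NN", "VBD"]):
        print(tree)
```

The number of candidates grows as the Catalan numbers (2 trees for three tags, 5 for four, 14 for five), which is why an approach guided by exemplars is attractive compared with scoring every tree.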

More can be found in the report and the article.

Results

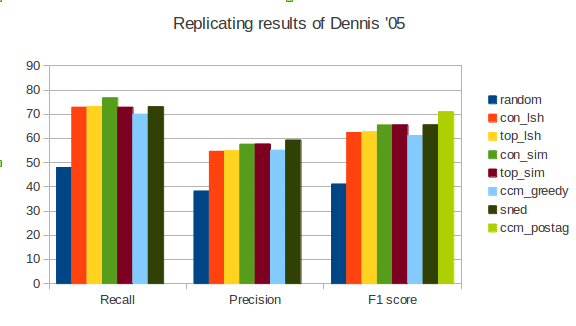

Our bars are con_sim, top_sim, con_lsh and top_lsh.

Of the four configurations (with or without Topics, with or without LSH), the one with Topics and without LSH achieved the best results. Moreover, its results are almost the same as those of Dennis ’05, which we are really happy about.

We were surprised that “with Topics” gave us only a very small improvement. Still, the improvement should be taken into account, since the algorithm is deterministic, i.e. it makes no random decisions.

On the other hand, we were not able to reproduce the SNED30 result, although we did achieve similar recall values. We suspect the cause is the parameters of the LSH function. Dennis uses 300 rules in 5 hash functions; we found this setting inferior, so we use 600 rules and 10 hash functions, and therefore the number of sentences satisfying the 30 NN limit is greater. In our case, 192 of 519 sentences, i.e. 37%, satisfied that condition.
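To illustrate why the LSH parameters affect how many sentences reach the neighbour limit, here is a generic bit-sampling LSH sketch. It rests on several assumptions and is not our implementation or Dennis's: sentences are assumed to be binary feature vectors, the class `BitSamplingLSH` and the helper `has_enough_neighbours` are hypothetical names, and "satisfying the 30 NN limit" is read as "LSH returns at least 30 candidate exemplars". The sketch makes no claim about how the "rules" above map onto its parameters.

```python
import random
from collections import defaultdict


class BitSamplingLSH:
    """Generic bit-sampling LSH over binary vectors (illustrative only)."""

    def __init__(self, dim, n_tables, bits_per_table, seed=0):
        rng = random.Random(seed)
        # Each hash function looks at its own random subset of positions.
        self.masks = [rng.sample(range(dim), bits_per_table)
                      for _ in range(n_tables)]
        self.tables = [defaultdict(set) for _ in range(n_tables)]

    def _keys(self, vec):
        return [tuple(vec[i] for i in mask) for mask in self.masks]

    def add(self, item_id, vec):
        for table, key in zip(self.tables, self._keys(vec)):
            table[key].add(item_id)

    def candidates(self, vec):
        # Union of the matching buckets across all hash tables.
        found = set()
        for table, key in zip(self.tables, self._keys(vec)):
            found |= table.get(key, set())
        return found


def has_enough_neighbours(index, vec, k=30):
    """Assumed reading of the 'k NN limit': at least k candidates returned."""
    return len(index.candidates(vec)) >= k
```

In this sketch, adding hash tables can only enlarge the candidate set, because the result is a union over tables, while sampling more positions per table makes each bucket stricter. That trade-off is one plausible reason a different setting changes how many sentences pass the limit.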